Analytics

Full reports of AlzPED summary and presentations can be downloaded here

AlzPED ANALYTICS SHOWCASE - EXPERIMENTAL DESIGN

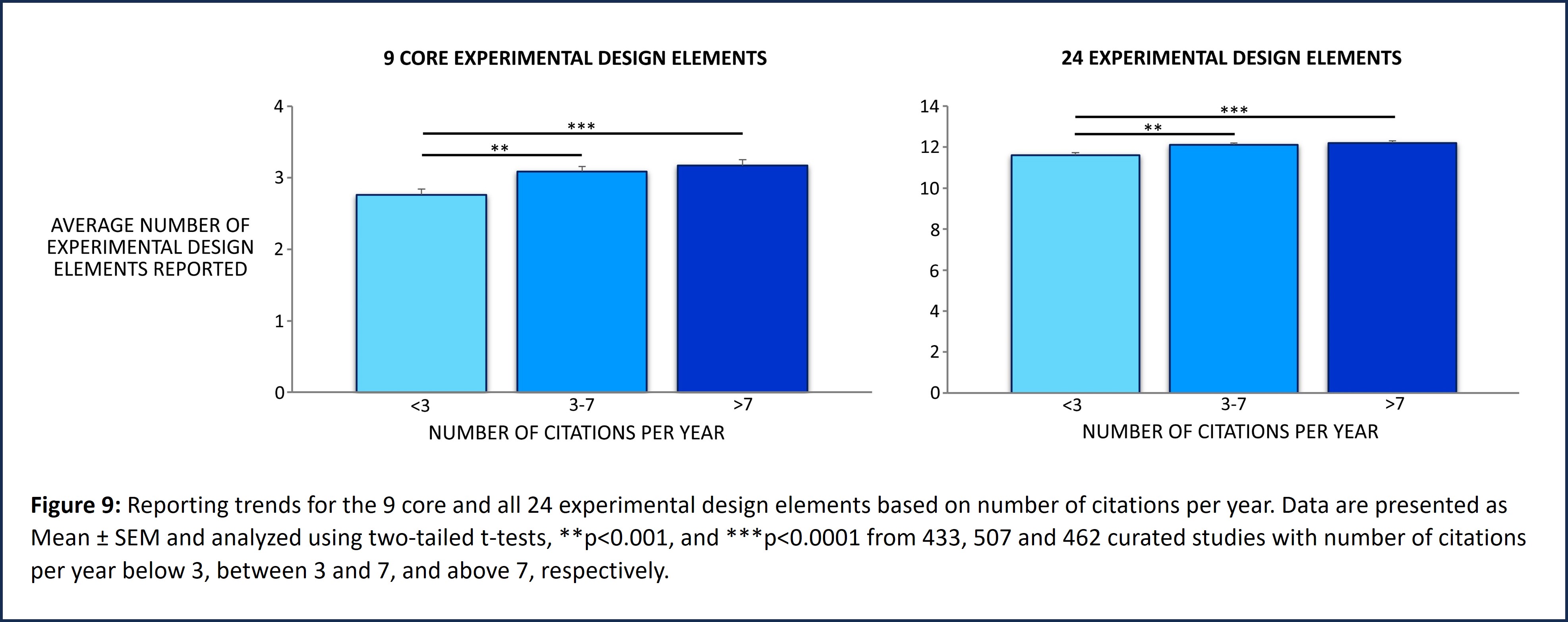

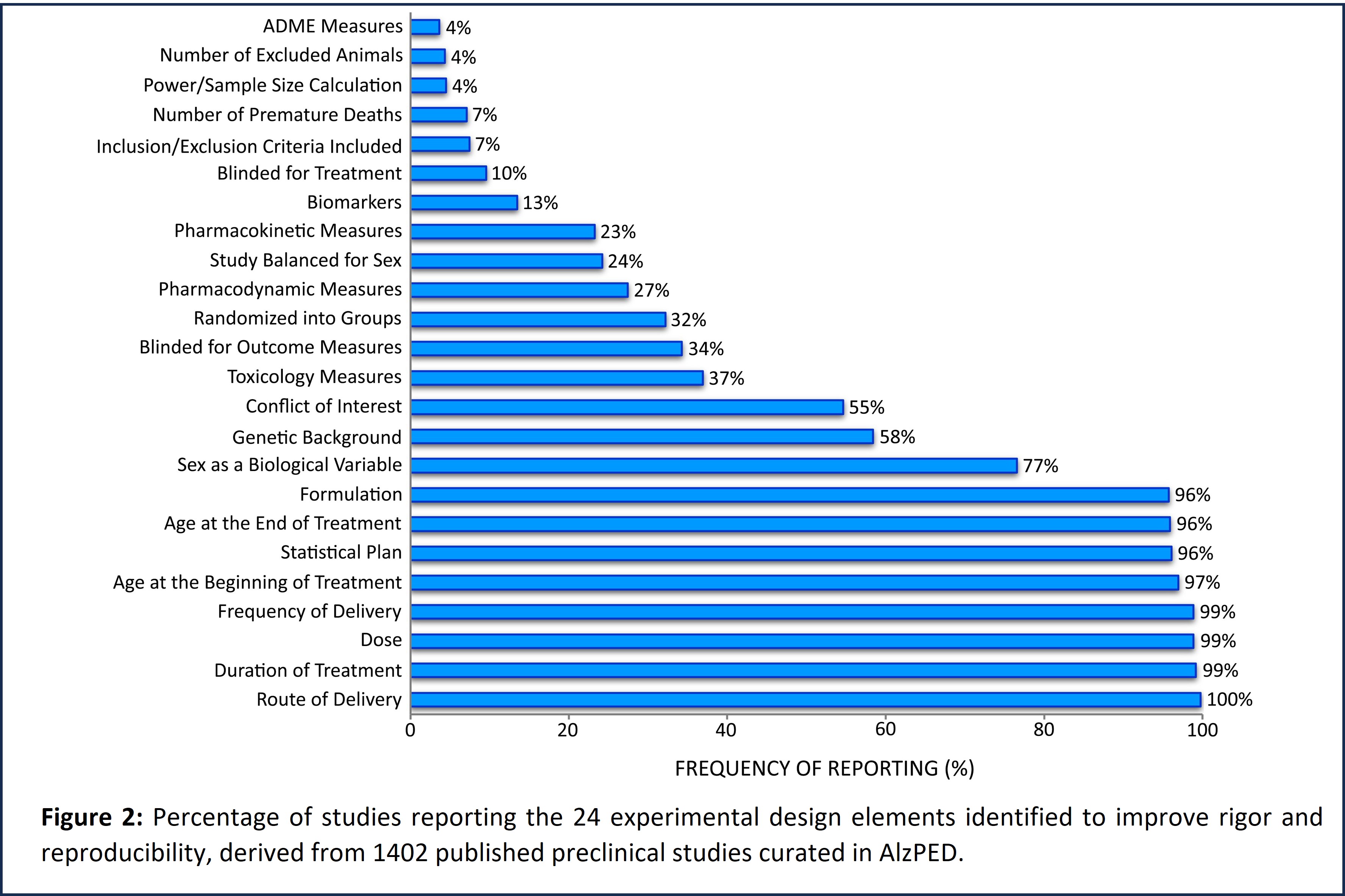

AlzPED’s Rigor Report Card (Figure 1) provides a standardized set of 24 study design elements that are specifically tailored for rigorous preclinical testing of candidate therapeutics in AD animal models. The Rigor Report Card further defines a succinct set of 9 core design elements that are critical for scientific rigor and reproducibility, without which the reliability of results cannot be assessed (Figure 3). These include design elements that report on power calculation and sample size, blinding for treatment allocation as well as for analysis of outcome measures, random allocation of intervention, age, sex, and genetic background of the animal model used, and whether the study has been balanced for sex. Also included are eligibility criteria such as inclusion and exclusion criteria that define which animals are eligible to be enrolled in the study, premature deaths and drop-outs, and inclusion of an author conflict of interest statement. Further, design elements that characterize the therapeutic agent being tested, such as pharmacokinetic, pharmacodynamic, ADME and toxicology measures, dose, and formulation of the therapeutic agent, as well as treatment paradigms such as route, frequency and duration of administration, and the plan for statistical analysis of study results are also included. These are all common elements of clinical trial study design (van der Worp et al., 2010; Shineman et al., 2011; Landis et al., 2012; Snyder et al., 2016; Percie du Sert et al., 2020). The ARRIVE guidelines provide clear definitions and examples for each study design element.

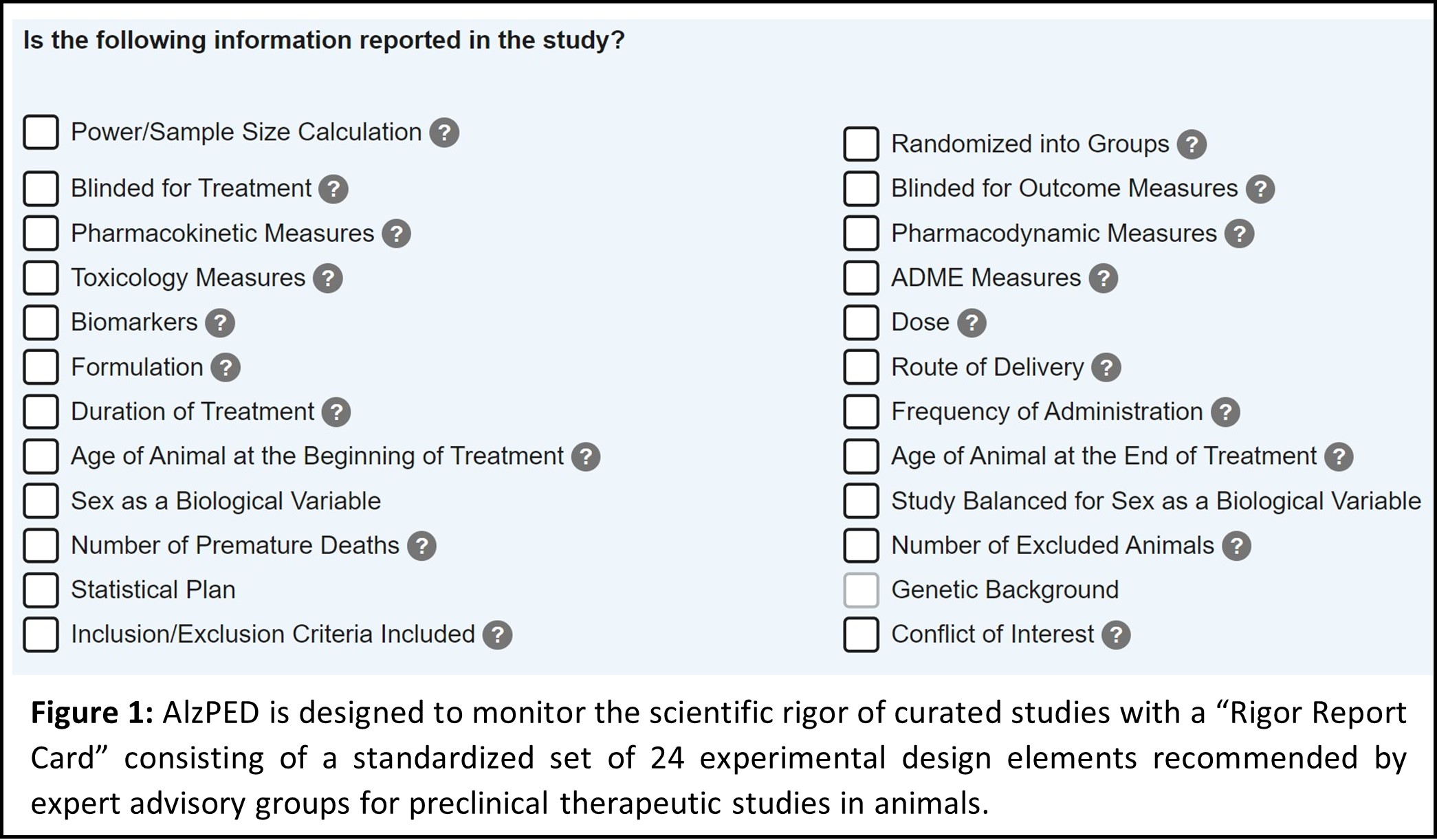

Scientific rigor of each curated study is monitored using AlzPED’s Rigor Report Card, which identifies experimental design elements reported in a study as well as those missing from the study. Evaluation of these rigor report cards demonstrates considerable disparities in reporting the 24 experimental design elements (Figure 2). Design elements like dose and formulation of the therapeutic agent being examined, treatment paradigms like route, frequency and duration of administration, age of the animals used in the study, and plan for statistical analysis of study results are consistently reported by at least 95% of the 1402 curated studies. However, critical elements of methodology like power calculation, blinding for treatment allocation as well as for analysis of outcome measures, random allocation of intervention, inclusion and exclusion criteria, and whether the study has been balanced for sex are significantly under-reported, being reported by fewer than 35% of the 1402 curated studies (Figure 3).
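The per-element reporting percentages described above can be illustrated with a minimal sketch. It assumes each report card is a mapping from a design element name to a reported/not-reported flag; this representation, the element names, and the function `reporting_rates` are hypothetical and are not AlzPED's actual data model.

```python
from collections import Counter


def reporting_rates(report_cards):
    """Return the fraction of studies that report each design element.

    `report_cards` is a list of dicts mapping an element name to True
    (reported) or False (missing). This layout is an assumption made
    for illustration only.
    """
    total = len(report_cards)
    if total == 0:
        return {}
    counts = Counter()
    elements = set()
    for card in report_cards:
        for element, reported in card.items():
            elements.add(element)
            if reported:
                counts[element] += 1
    # An element absent from a card is treated as not reported.
    return {element: counts[element] / total for element in sorted(elements)}


# Toy input with made-up values, not AlzPED data.
example_cards = [
    {"dose": True, "power_calculation": False},
    {"dose": True, "power_calculation": True},
    {"dose": True, "power_calculation": False},
    {"dose": True, "power_calculation": False},
]
example_rates = reporting_rates(example_cards)
```

On this toy input, `dose` is reported by every study and `power_calculation` by one study in four, which mirrors the kind of disparity the report cards reveal.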

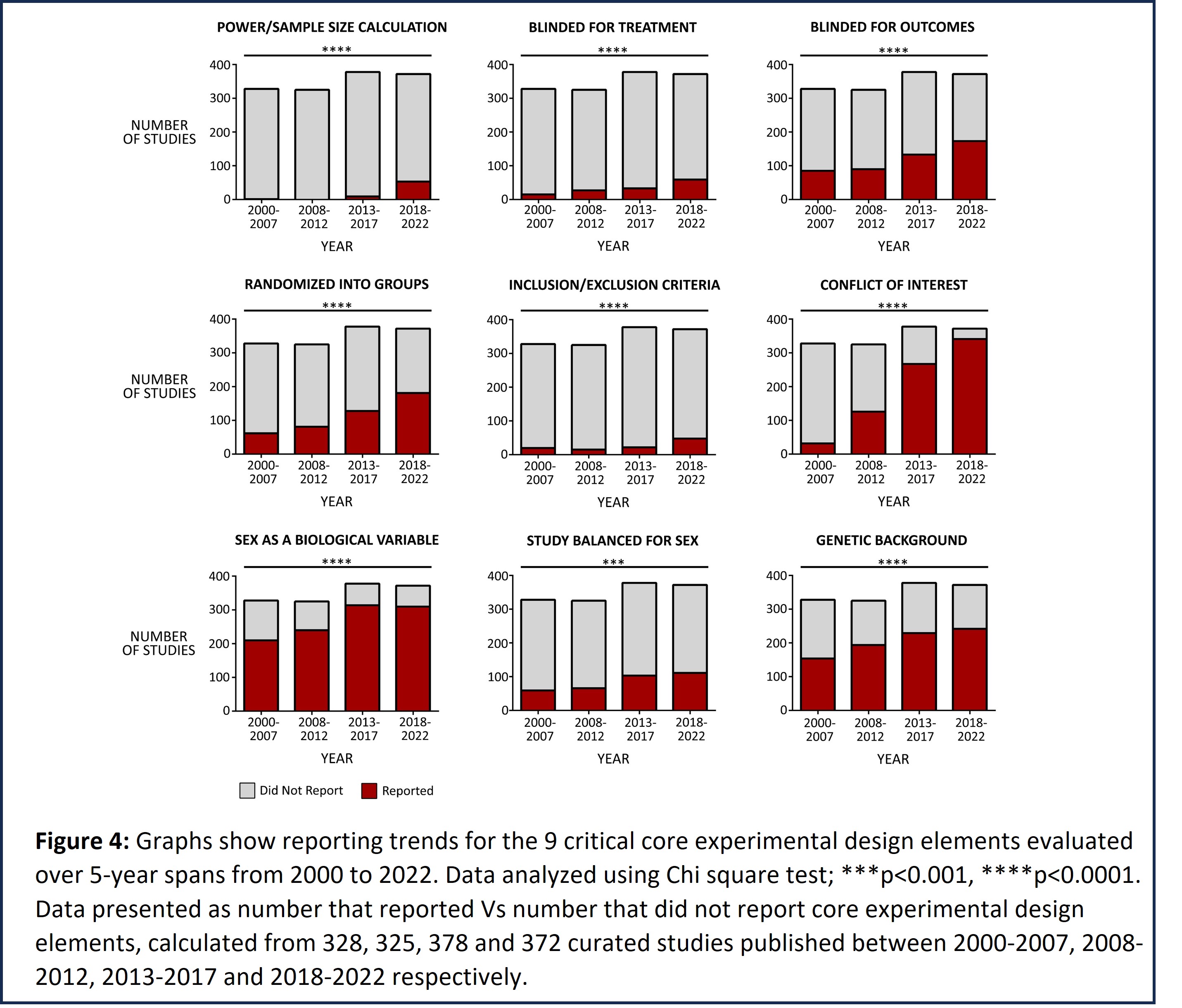

Reporting trends for the 9 critical core experimental design elements were evaluated over 5-year spans from 2000 to 2022 (Figure 4). Our analysis demonstrates an incremental improvement over consecutive 5-year spans in the proportion of studies reporting several critical elements like blinding for outcome analysis, randomized allocation of therapeutic agent, genetic background as well as sex of the animal model used in the study, and author conflict of interest statement. However, there is little improvement in the proportion of studies reporting power calculation, blinding for treatment allocation, inclusion and exclusion criteria, and whether the study has been balanced for sex. Of note, the NIH issued 2 rigor-related policies: (i) NOT-OD-11-109, issued in 2011, requires transparency in reporting financial conflicts of interest, and (ii) NOT-OD-15-102, issued in 2015, emphasizes the consideration of sex as a biological variable. Enforcement of these policies clearly improved the reporting of these core experimental design elements.

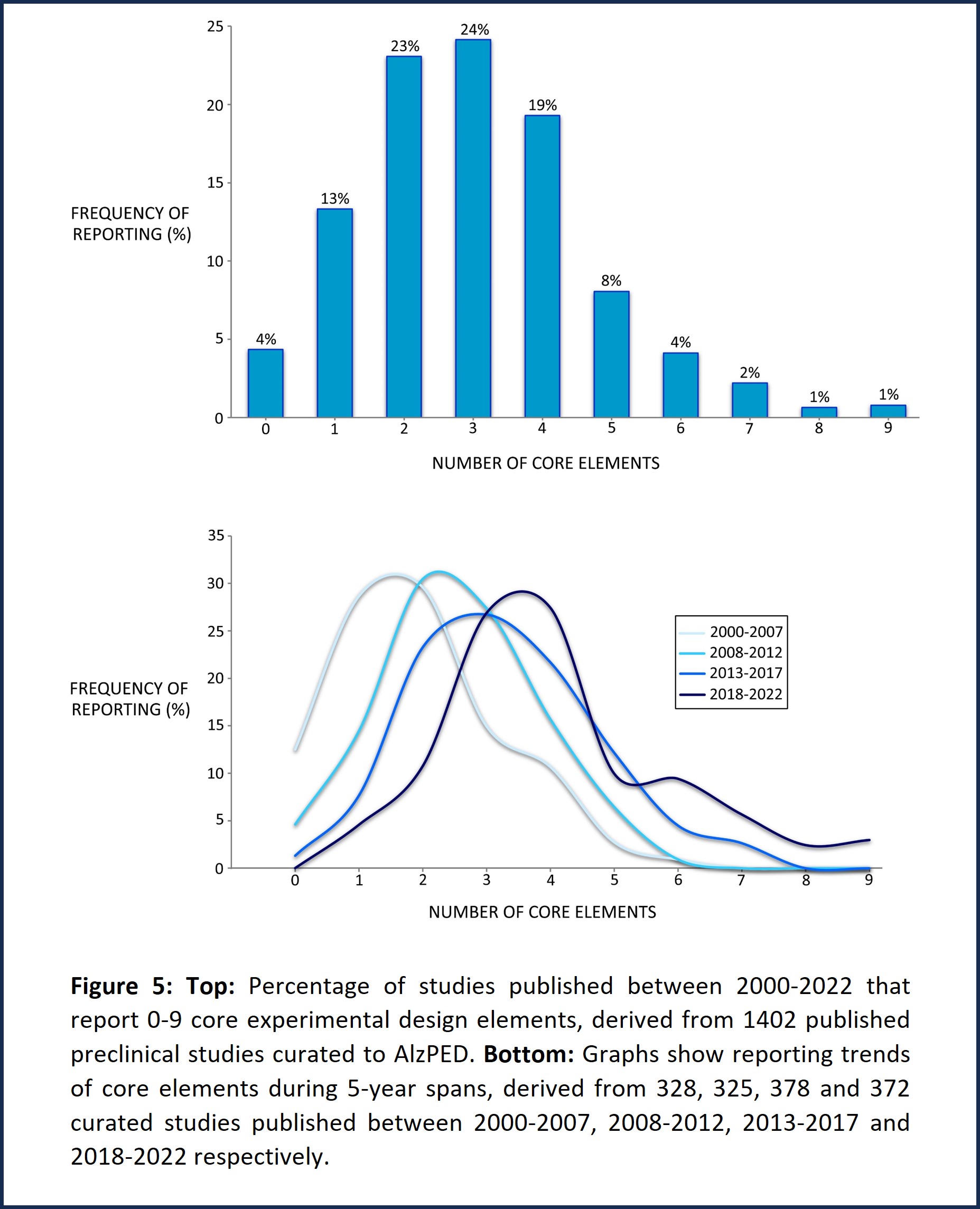

Further evaluation of the reporting trends in the 9 critical core experimental design elements demonstrates that few studies report more than 5 core design elements, with most studies reporting only 2-4 core design elements (Figure 5, top). However, studies published between 2018 and 2022 report 3-5 core design elements, compared with 1-2 core design elements for studies published between 2000 and 2007 (Figure 5, bottom).
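Counting how many core elements each study reports can be sketched as follows. The nine identifiers paraphrase the core elements named in the trend analysis; the identifier spellings, the dict-based report card, and the helper names `core_score` and `score_distribution` are assumptions for illustration, not AlzPED's implementation.

```python
from collections import Counter

# Hypothetical identifiers for the 9 core design elements.
CORE_ELEMENTS = (
    "power_calculation",
    "blinding_treatment_allocation",
    "blinding_outcome_analysis",
    "random_allocation",
    "sex",
    "genetic_background",
    "balanced_for_sex",
    "inclusion_exclusion_criteria",
    "conflict_of_interest",
)


def core_score(card):
    """Count how many of the 9 core elements a report card marks as reported."""
    return sum(1 for element in CORE_ELEMENTS if card.get(element, False))


def score_distribution(cards):
    """Map each core-element count (0-9) to the number of studies with that count."""
    return Counter(core_score(card) for card in cards)


# Toy report cards with made-up values, not AlzPED data.
toy_cards = [
    {"sex": True, "genetic_background": True},
    {"sex": True, "genetic_background": True, "conflict_of_interest": True},
    {"sex": True, "random_allocation": True},
]
toy_distribution = score_distribution(toy_cards)
```

Grouping such a distribution by publication period would give the kind of comparison shown in the bottom panel of Figure 5.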

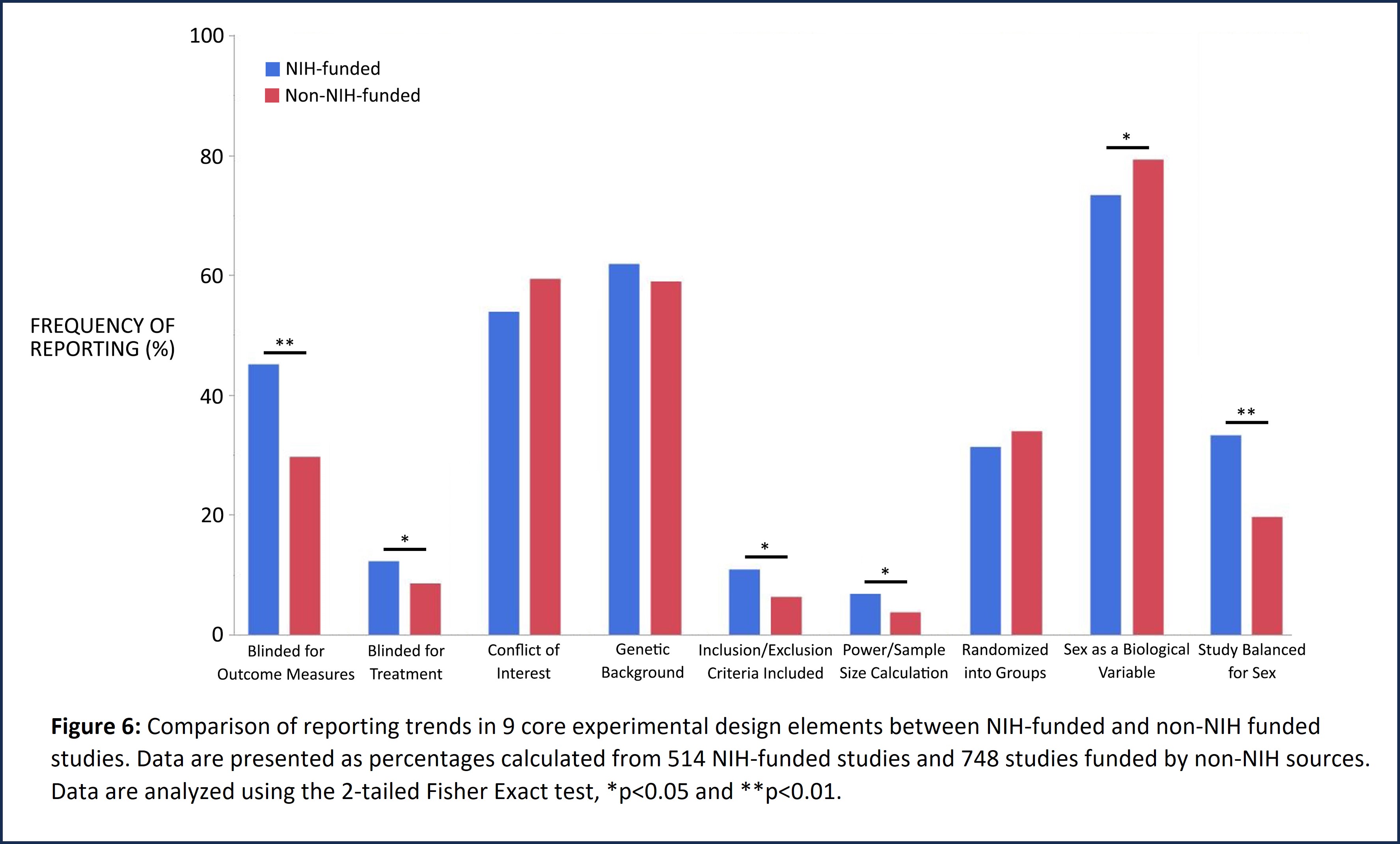

NIH-issued policies to enhance the rigor, reproducibility and translatability of research place significant emphasis on rigorous scientific method, study design, and consideration of biological variables such as sex. Analysis of study design and methodology of NIH-funded published studies curated in AlzPED demonstrates a positive impact of these policies. NIH-funded studies show significantly improved reporting of power/sample size calculation, blinding for outcome measures, inclusion/exclusion criteria and balancing the study for sex (Figure 6). Non-NIH-funded studies include those funded by US federal and state organizations, nonprofit organizations, pharmaceutical companies, the European Union, non-EU European governments, the governments of Canada, Mexico, Brazil, China, Japan, South Korea, India, Iran, Australia and others, and university start-ups.

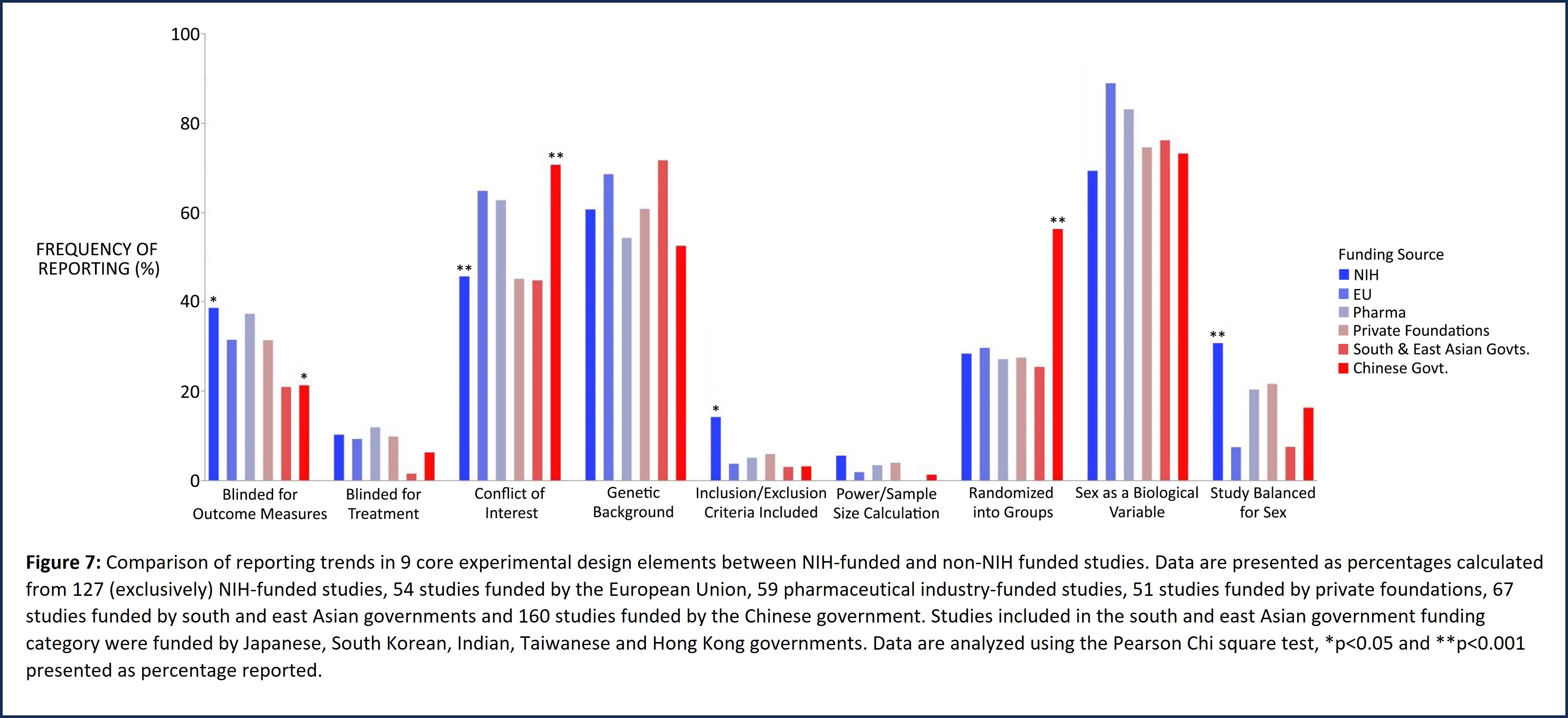

Studies funded exclusively by the NIH show significantly improved reporting of inclusion/exclusion criteria and balancing the study for sex (Figure 7) compared with those supported by specific non-NIH funding sources that include the European Union, nonprofit organizations, pharmaceutical companies, governments of China and other south and east Asian countries (Japan, South Korea, India, Hong Kong and Taiwan).

The lack of rigor and reproducibility of research findings in the scientific publishing arena is well documented. A systematic review of the rigor and translatability of highly cited animal studies published in leading scientific journals (including Science, Nature, Cell, and others) demonstrated a lack of scientific rigor in study design. A comprehensive review of reporting trends for critical experimental design elements in highly cited studies published in leading journals that are curated in AlzPED revealed a similar pattern of poor study design and reporting practices. These analyses are described in greater detail in the next segment.

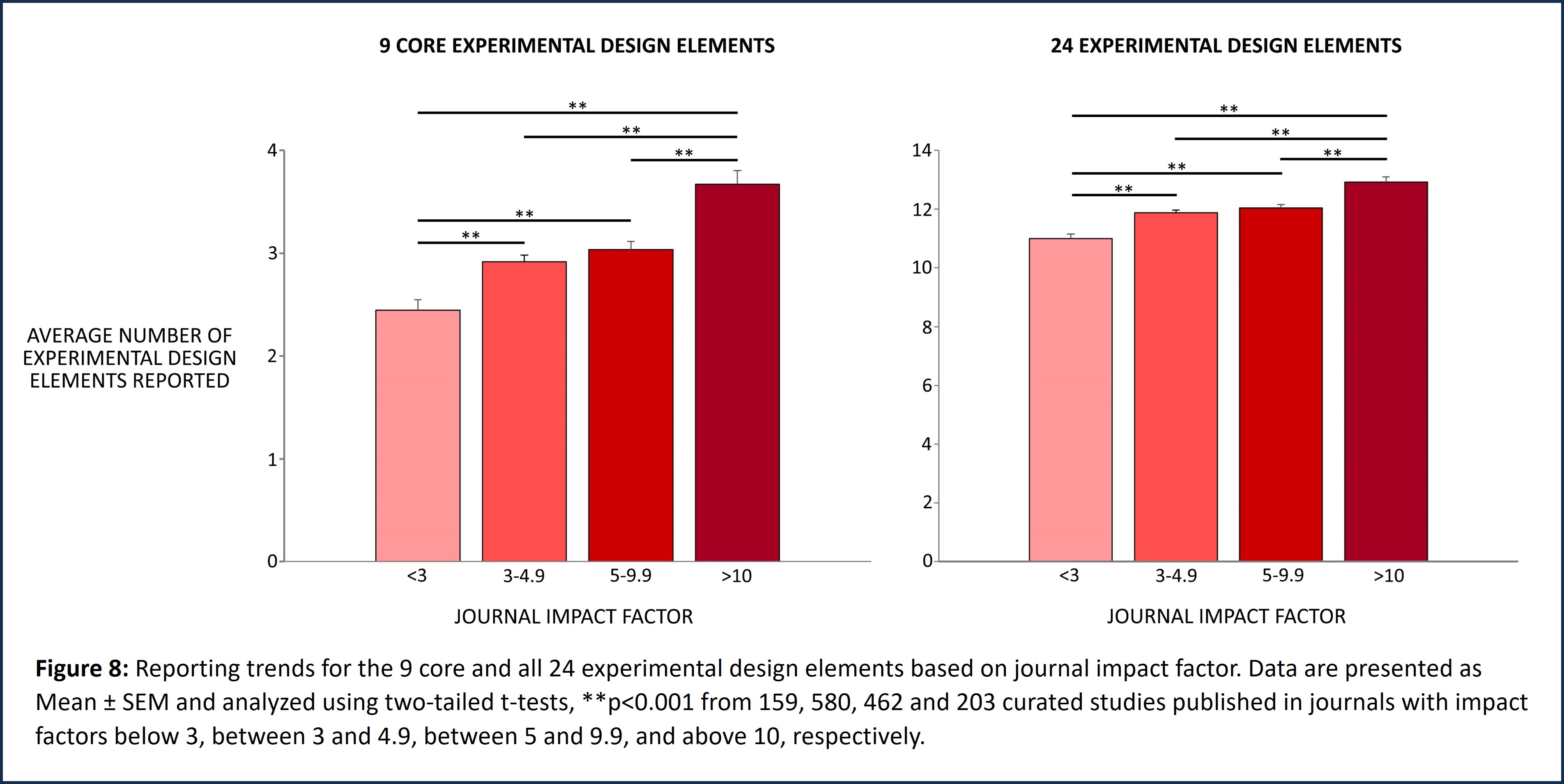

Reporting trends for the 9 core and all 24 experimental design elements were evaluated based on the impact factor of the journal in which the curated preclinical study was published (Figure 8). These studies were categorized into 4 groups based on 2021 journal impact factor values. Curated studies published in journals with impact factors below 3 were sorted into Group 1, and those published in journals with impact factors between 3 and 4.99, or between 5 and 9.99, were sorted into Groups 2 and 3 respectively. Studies published in high impact journals with impact factors greater than 10 were sorted into Group 4. While t-tests show that there are statistically significant differences in reporting the 9 core elements as well as all 24 elements of experimental design between these four groups, overall, the data demonstrate poor reporting practices irrespective of journal impact factor.
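The impact factor grouping can be expressed as a small function. This is a sketch: the function name is hypothetical, and because the text leaves an impact factor of exactly 10 unassigned, placing it in Group 4 is an assumption.

```python
def impact_factor_group(impact_factor):
    """Return the group (1-4) for a 2021 journal impact factor.

    Thresholds follow the text: below 3, 3 to 4.99, 5 to 9.99, and
    greater than 10. Assigning exactly 10 to Group 4 is an assumption.
    """
    if impact_factor < 0:
        raise ValueError("impact factor cannot be negative")
    if impact_factor < 3:
        return 1
    if impact_factor < 5:
        return 2
    if impact_factor < 10:
        return 3
    return 4
```

For example, `impact_factor_group(4.99)` falls in Group 2 and `impact_factor_group(5)` in Group 3.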

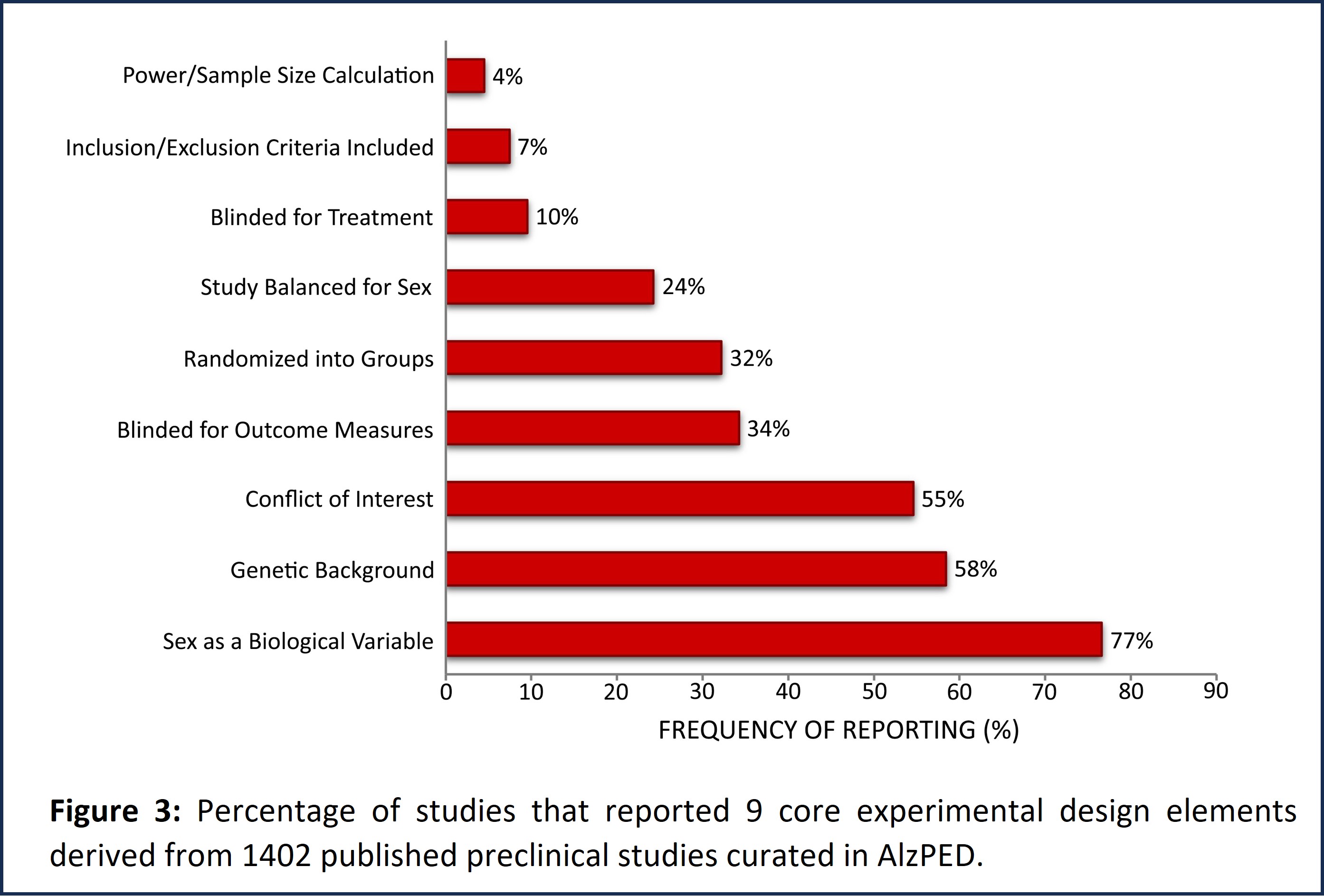

Reporting trends for the 9 core and all 24 experimental design elements were evaluated based on the relative number of citations per year of each curated study published between 1996 and 2021 (Figure 9). The relative number of citations for each curated study was calculated by dividing the total number of citations for that study by the number of years since publication. For example, for a study published in 2020, the total number of citations was divided by 2; for a study published in 2019, it was divided by 3, and so on. These studies were categorized into 3 groups based on the relative number of citations per year. Curated studies with fewer than 3 citations per year were sorted into Group 1, those with between 3 and 7 citations per year into Group 2, and those with more than 7 citations per year into Group 3. While t-tests show that there are statistically significant differences in reporting the 9 core elements as well as all 24 elements of experimental design between these three groups, overall, the data demonstrate poor reporting practices irrespective of relative number of citations per year.
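The citation normalization and grouping can be sketched in a few lines. The reference year of 2022 is inferred from the worked examples (a 2020 study is divided by 2, a 2019 study by 3); the function names are hypothetical, and treating both 3 and 7 as part of Group 2 is an assumption about how "between 3 and 7" handles its endpoints.

```python
def citations_per_year(total_citations, publication_year, reference_year=2022):
    """Divide total citations by the number of years since publication.

    The default reference_year of 2022 is inferred from the examples in
    the text, not stated there explicitly.
    """
    years = reference_year - publication_year
    if years <= 0:
        raise ValueError("publication_year must be before reference_year")
    return total_citations / years


def citation_group(rate):
    """Return the group (1-3) for a relative number of citations per year."""
    if rate < 3:
        return 1
    if rate <= 7:  # endpoints 3 and 7 are assumed to belong to Group 2
        return 2
    return 3
```

For example, a study published in 2020 with 10 citations has 10 / 2 = 5 citations per year and falls into Group 2.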